Hi Brent,

Do you have a sense of why some records are being dropped? Is it a bug in the other system that makes its query results inaccurate? Maybe it's academic, but I'm curious.

I suggest using the Lookup Table actions with the "my account" scope to share data across automations.

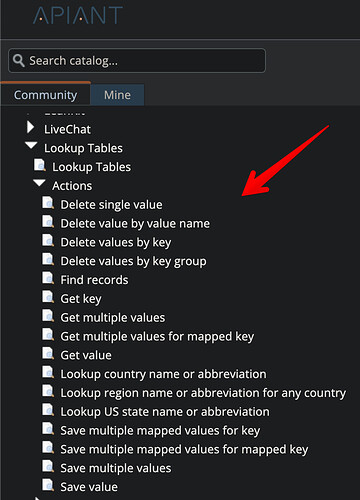

I have loaded additional actions into the Lookup Tables app in your main and dev systems (not Xanedu yet).

Some of these actions (the ones with static input fields defined in their action module) can be dragged from the assembly editor catalog and used directly in your assemblies.

A high-level design is something like this:

Your existing automation would be modified to also write record ids into the database in the “my account” scope. Each entry would include an epoch timestamp.

A copy of that automation would audit those entries. It would fetch the stored entries for a given period, defined by a range of epoch timestamps; fetch the data from the source API for the same time range; filter out the record ids already in the database; and then do the normal processing, including the Emit New Items trigger module. That way the audit automation processes each missed record only once.
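Both the database lookup and the source API call in the audit need the same pair of epoch timestamps. The sketch below shows one way to compute that window; the function name and the optional `lag_seconds` (which skips the most recent interval so the original automation has a chance to record its entries first) are hypothetical choices, not part of the platform.

```python
import time


def audit_window(now_epoch: int, lookback_seconds: int, lag_seconds: int = 0) -> tuple[int, int]:
    """Return (lower_bound, upper_bound) in epoch seconds for one audit pass.

    Hypothetical helper: lag_seconds shifts the window back so very recent
    records, which the original automation may not have saved yet, are skipped.
    """
    upper = now_epoch - lag_seconds
    lower = upper - lookback_seconds
    return lower, upper


if __name__ == "__main__":
    # Audit the hour that ended ten minutes ago.
    lower, upper = audit_window(int(time.time()), lookback_seconds=3600, lag_seconds=600)
    print(lower, upper)
```

Whatever window is chosen, the same `lower` and `upper` values should be passed to both the Lookup Table query and the API request so the two result sets are comparable.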

The nuts and bolts of how to do that would be something like this:

First make a copy of the source trigger assembly. The copy will become the audit trigger.

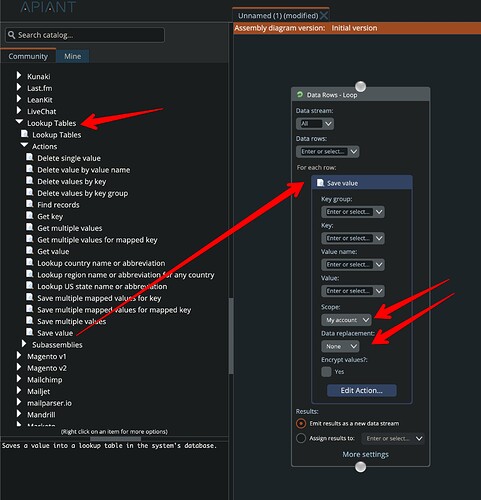

In the original trigger assembly, add a Loop containing the "Save value" action to iterate through the fetched records and store each record id in the "my account" scope. The "value" is the epoch timestamp and the "value name" is the record id. The "key" and "key group" names can be anything you like. I don't think it needs any data replacement, so choose "none".
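To make the data layout concrete, here is a minimal in-memory model of what that Loop writes. It assumes each fetched record has an `id` field, and it uses a plain dict as a stand-in for the Lookup Table; the names `KEY_GROUP`, `KEY`, and `save_record_ids` are made up for illustration and are not the Lookup Tables API.

```python
# Hypothetical in-memory stand-in for a Lookup Table. In the real system the
# data lives in the Lookup Tables app and is written by the "Save value" action.
SCOPE = "my account"
KEY_GROUP = "audit"            # made-up name; any value works
KEY = "processed-records"      # made-up name; any value works


def save_record_ids(store: dict, records: list[dict], now_epoch: int) -> dict:
    """Mimic a Loop around "Save value": one entry per fetched record.

    The value name is the record id and the value is the epoch timestamp.
    Saving the same id twice simply overwrites its timestamp.
    """
    table = store.setdefault((SCOPE, KEY_GROUP, KEY), {})
    for record in records:
        table[str(record["id"])] = now_epoch
    return store


if __name__ == "__main__":
    store: dict = {}
    save_record_ids(store, [{"id": 101}, {"id": 102}], now_epoch=1_700_000_000)
    print(store)
```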

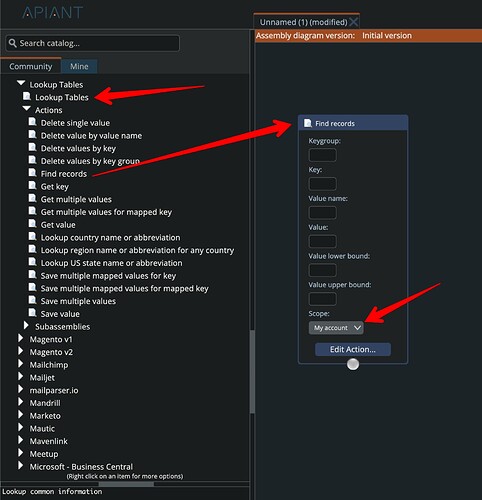

Then, in the copied audit trigger assembly, use the Lookup Tables module that fetches entries by value range, giving it a start and end epoch timestamp. The key and key group are the made-up values you used in the original trigger, again with the "my account" scope. The "value lower bound" is the start epoch time and the "value upper bound" is the end epoch time, so the module returns the record ids saved within that time range.
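The range lookup behaves roughly like the filter below, sketched against the same kind of hypothetical in-memory table keyed by (scope, key group, key). Treating both bounds as inclusive is an assumption; check how the real module handles entries that fall exactly on a bound.

```python
def fetch_ids_in_range(store: dict, scope: str, key_group: str, key: str,
                       lower: int, upper: int) -> set[str]:
    """Return value names (record ids) whose epoch value lies in [lower, upper].

    Hypothetical stand-in for the Lookup Tables range query; the inclusive
    bounds are an assumption, not confirmed platform behavior.
    """
    table = store.get((scope, key_group, key), {})
    return {name for name, value in table.items() if lower <= value <= upper}


if __name__ == "__main__":
    demo = {("my account", "audit", "processed-records"): {"a": 100, "b": 200, "c": 300}}
    print(sorted(fetch_ids_in_range(demo, "my account", "audit", "processed-records", 150, 300)))
```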

Then make your API call to fetch all records in the same time range and filter out any whose ids appear in the database results. The records that remain are the ones that were missed and need processing. The audit still uses the Emit New Items trigger module, so it processes each missed item only once.
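The filtering step is a set difference on record ids. The sketch below pairs it with a hypothetical `EmitNewItems` class that only imitates the "emit each item once" idea by remembering ids it has already passed on; it is not the platform's Emit New Items module, and records are again assumed to carry an `id` field.

```python
def find_missed(api_records: list[dict], saved_ids: set[str]) -> list[dict]:
    """Keep only API records whose id was never saved by the original trigger."""
    return [r for r in api_records if str(r["id"]) not in saved_ids]


class EmitNewItems:
    """Hypothetical stand-in: emits each record id at most once across runs."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def emit(self, records: list[dict]) -> list[dict]:
        fresh = []
        for r in records:
            rid = str(r["id"])
            if rid not in self._seen:   # skip ids emitted earlier, even in this batch
                self._seen.add(rid)
                fresh.append(r)
        return fresh


if __name__ == "__main__":
    api = [{"id": 1}, {"id": 2}, {"id": 3}]
    missed = find_missed(api, saved_ids={"1", "3"})
    emitter = EmitNewItems()
    print(emitter.emit(missed))   # first audit pass
    print(emitter.emit(missed))   # a repeated pass emits nothing new
```

Keeping the "already emitted" memory separate from the saved-ids table means an overlapping audit window does not cause a missed record to be processed twice.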

Other designs are possible, but this technique seems the best one to try first, since it involves relatively little effort.

Long story short, the Lookup Table actions can be used to share data across automations and even accounts.