Sorry, I needed to update the license key as well.

You should have access now; reload the assembly editor first.

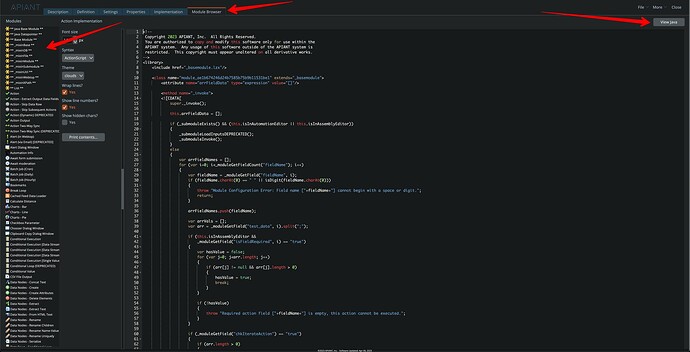

LZX syntax is basically JavaScript. The LZX implementation is used when the module executes in the browser, in either the assembly editor or the automation editor. The Java implementation is used only server-side.

Many modules have almost identical LZX and Java implementations. The Module API was designed so that the code looks as similar as possible for the browser and the server. Often the Java code differs only in adding type info to variables.
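As a rough illustration of that "add type info" difference, here is a sketch using a made-up helper. `sumValues` and `TypeInfoSketch` are hypothetical names, not part of the Module API; the untyped browser-side form is shown only as a comment for comparison.

```java
public class TypeInfoSketch {

    // Browser-side style (JavaScript-like, untyped), shown for comparison only:
    //
    //   function sumValues(values) {
    //       var total = 0;
    //       for (var i = 0; i < values.length; i++) {
    //           total += values[i];
    //       }
    //       return total;
    //   }

    // Server-side style: the same logic, with type info added to the
    // parameter, the local variables, and the return value.
    public static double sumValues(double[] values) {
        double total = 0;
        for (int i = 0; i < values.length; i++) {
            total += values[i];
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumValues(new double[] {1, 2, 3.5}));
    }
}
```

The loop body is identical in both forms; only the declarations change, which is why translating is mostly copy-paste.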

The easiest way to get started is to examine existing modules and clone the ones that are close to the functionality you want.

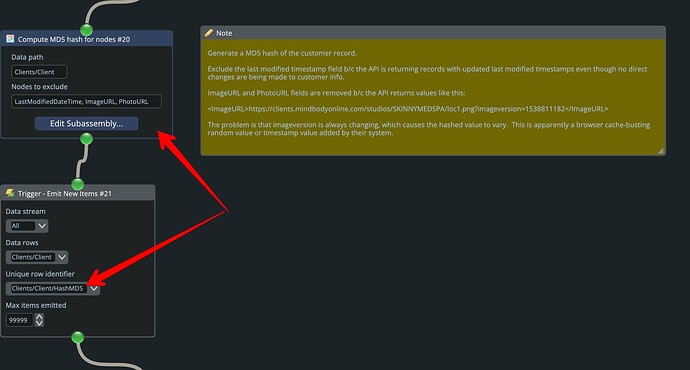

Data stream processing modules all follow the same boilerplate pattern: they validate their configuration, then run two nested loops, the outer one iterating through the input wires and the inner one through the data streams within each wire. (Almost all modules have only a single wire as input, however.)
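The shape of that pattern can be sketched in plain Java as follows. This is only an illustration under assumptions: `Wire`, `DataStream`, `validateConfig`, `process`, and the `algorithm` setting are hypothetical stand-ins, not the real Module API classes, and `String.hashCode` is just a placeholder for whatever per-value work a module does.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;

public class HashModuleSketch {

    // Hypothetical stand-in: one named data stream of string values.
    public static final class DataStream {
        public final String name;
        public final List<String> values;
        public DataStream(String name, List<String> values) {
            this.name = name;
            this.values = values;
        }
    }

    // Hypothetical stand-in: one input wire carrying several data streams.
    public static final class Wire {
        public final List<DataStream> streams;
        public Wire(List<DataStream> streams) {
            this.streams = streams;
        }
    }

    // Step 1: validate the configuration before touching any data.
    static void validateConfig(Map<String, String> config) {
        String algorithm = config.get("algorithm");
        if (algorithm == null || algorithm.isEmpty()) {
            throw new IllegalArgumentException("Missing required setting: algorithm");
        }
    }

    public static List<Wire> process(Map<String, String> config, List<Wire> inputWires) {
        validateConfig(config);
        List<Wire> outputWires = new ArrayList<>();
        // Step 2: outer loop over input wires (usually just one).
        for (Wire wire : inputWires) {
            List<DataStream> outStreams = new ArrayList<>();
            // Step 3: inner loop over the data streams within the wire.
            for (DataStream stream : wire.streams) {
                List<String> hashed = new ArrayList<>();
                for (String value : stream.values) {
                    // Placeholder transformation: hex form of String.hashCode().
                    hashed.add(Integer.toHexString(value.hashCode()));
                }
                outStreams.add(new DataStream(stream.name, hashed));
            }
            outputWires.add(new Wire(outStreams));
        }
        return outputWires;
    }

    public static void main(String[] args) {
        List<Wire> in = Arrays.asList(
            new Wire(Arrays.asList(new DataStream("names", Arrays.asList("abc", "de")))));
        List<Wire> out = process(Collections.singletonMap("algorithm", "hashCode"), in);
        System.out.println(out.get(0).streams.get(0).values);
    }
}
```

When you read a real module, look for these same three pieces (configuration check, wire loop, stream loop); the per-value work in the middle is usually the only part that differs from module to module.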

I suggest you first examine a simple data processing module to help you understand the code. The Math Hash Functions module looks like a good one to start with. Check out both its LZX and Java implementations. You can quickly examine module code in the Module Browser tab. The items at the top of the catalog are the Module API classes.

Develop and test on your dev server. Then publish to your production server when ready (via the “publish” right-click menu in the catalog).

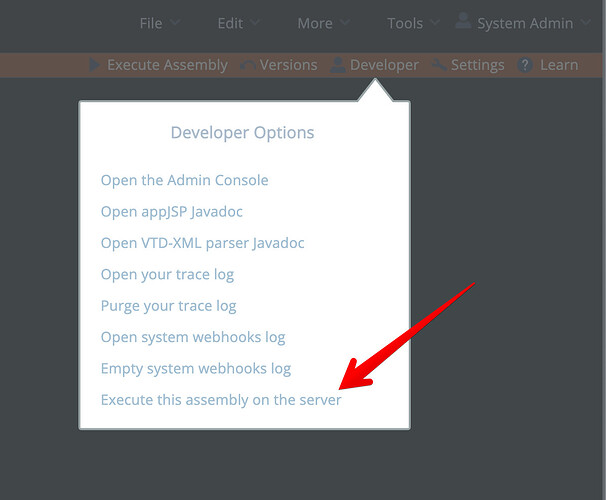

The best development approach is to first get the LZX code working in the browser by running the module in an assembly (use debug mode or console.log as needed to trace your code). Once the LZX code is working, translate it to Java (mostly copy-paste, then add type info to variables). Run the assembly on the server to test the Java code:

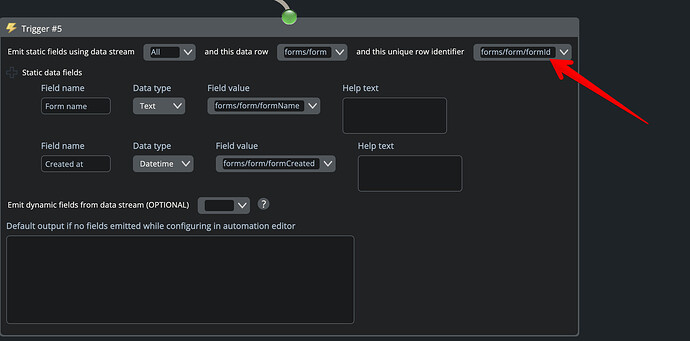

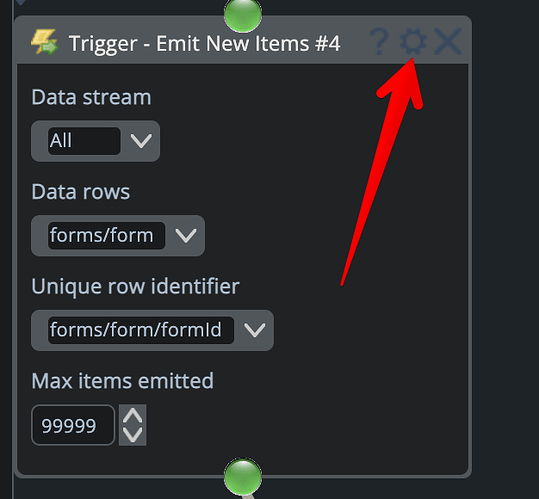

Your cloned Trigger - Emit New Items module would need to be tested within an automation. Its LZX code does very little because it has no real purpose when running in the browser other than to facilitate assembly building.

The “Server-Side Script” module and inline Java/PHP are most often used to extend system functionality in assemblies, but there are times when customizing or building modules is easier and better. There is also a runtime performance benefit to using modules, because inline code and all the input XML have to be either compiled or interpreted on the fly.

Let us know if you need help!